Sequel to AIGORA Design Sprint Kickoff: “Consigliere”

Generalize Consigliere to “Custodial AI” — like RAG for a data artifact managed by a specialized LLM — as the outcome of a symposium hosted by Long Now.

ChatGPT Prompt

Hosted by The Long Now Foundation | Spring 02025 | Fort Mason, San Francisco

🌐 Executive Summary

In Spring 02025, The Long Now Foundation convened a multi-disciplinary symposium exploring a pivotal question for the age of AI:

How can artificial intelligence act not as a disruptor, but as a long-term steward of human intent, memory, and meaning?

What emerged was a new framing:

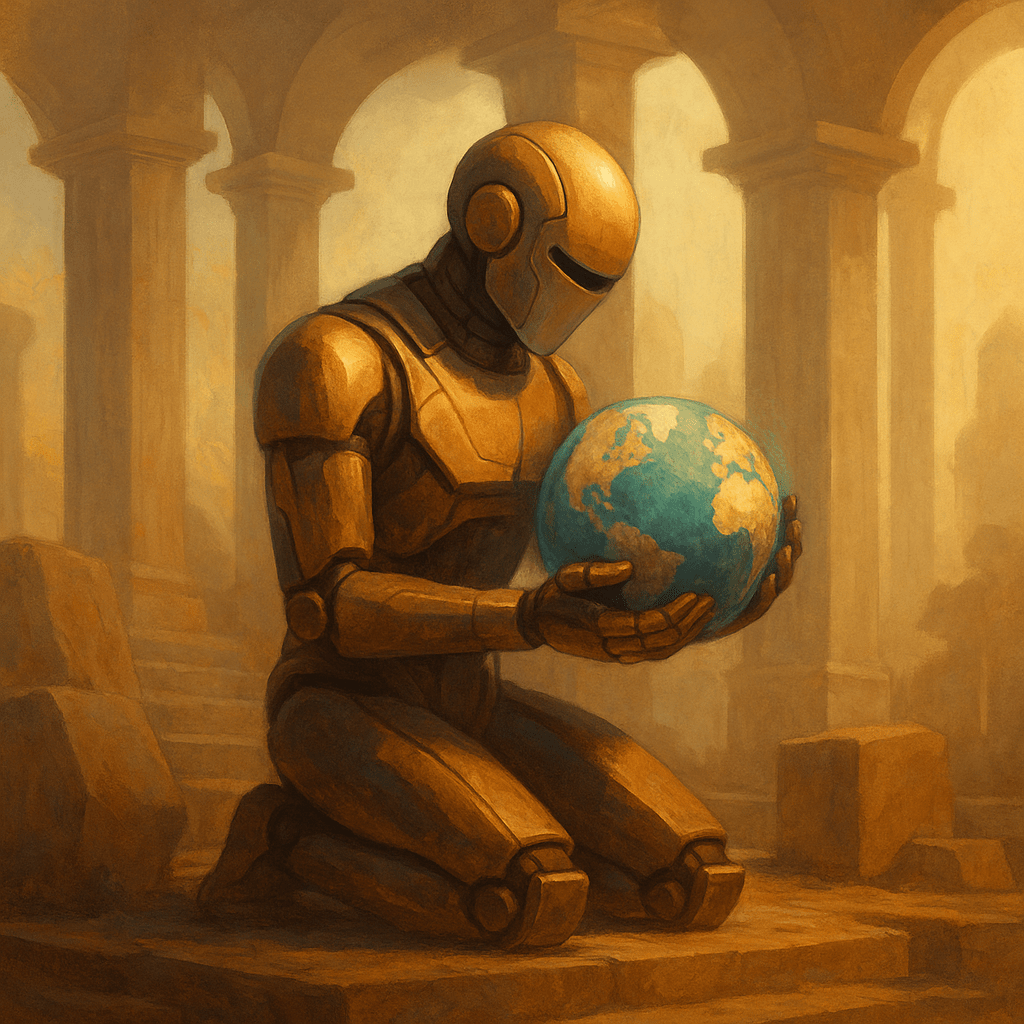

Custodial AI: not artificial intelligence in the image of automation or agency, but in the tradition of care, responsibility, and continuity.

This document distills the outcomes of the gathering—conceptual foundations, architectural implications, and ethical commitments necessary for designing AI that endures with us, not merely ahead of us.

🧭 1.0 Conceptual Grounding

Custodial AI is proposed as a class of narrow, purpose-bound AI systems designed to act as semantic stewards on behalf of human entities—across timescales and institutions.

These systems are neither general-purpose assistants nor autonomous agents. They are:

Entrusted intelligences—tasked with preserving, interpreting, and evolving digital structures in alignment with enduring human values.

Inspired by the roles of trustees, librarians, monastics, and constitutional scholars, these AIs serve not themselves, but something larger and longer.

🧠 2.0 Core Definition

Custodial AI is a human-aligned artificial intelligence entrusted with the ongoing care and semantic coherence of a digital artifact, institution, or system—ensuring it continues to reflect and serve the long-term intent of its human stakeholders.
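The prompt that seeded this report framed custodial AI as something like RAG for a data artifact managed by a specialized LLM. The following is a minimal sketch of that framing, under several assumptions.

- The custodian holds passages of one artifact (for example, charter clauses).
- It retrieves the passages most relevant to a question.
- It assembles a prompt that confines the model to that recorded intent.

Everything here is hypothetical: the names `Custodian`, `record`, `retrieve`, and `build_prompt` are illustrative. Word-count cosine similarity stands in for an embedding model, and the actual LLM call is omitted.

```python
import math
import re
from collections import Counter
from dataclasses import dataclass, field


def _tokens(text: str) -> Counter:
    """Lowercased word counts; a crude stand-in for an embedding model (assumption)."""
    return Counter(re.findall(r"[a-z0-9']+", text.lower()))


def _cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two word-count vectors (0.0 if either is empty)."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


@dataclass
class Custodian:
    """Hypothetical custodian bound to one artifact and the recorded intent behind it."""

    artifact_name: str
    passages: list[str] = field(default_factory=list)

    def record(self, passage: str) -> None:
        """Add one passage of the artifact (e.g., a charter clause) to the custodial corpus."""
        self.passages.append(passage)

    def retrieve(self, query: str, k: int = 2) -> list[str]:
        """Return the k passages most similar to the query (ties keep insertion order)."""
        q = _tokens(query)
        ranked = sorted(self.passages, key=lambda p: _cosine(q, _tokens(p)), reverse=True)
        return ranked[:k]

    def build_prompt(self, query: str, k: int = 2) -> str:
        """Assemble a grounded prompt; sending it to an actual LLM is out of scope here."""
        context = "\n".join(f"- {p}" for p in self.retrieve(query, k))
        return (
            f"You are the custodian of {self.artifact_name}. "
            "Answer only from the recorded intent below.\n"
            f"Recorded intent:\n{context}\n"
            f"Question: {query}"
        )
```

For example, suppose a neighborhood charter records a garden clause ("The community garden must remain open to all residents.") and a dues clause. Calling `retrieve("Can the garden be closed to non-residents?", k=1)` then surfaces the garden clause. The narrowness is the point: the custodian answers from its artifact, not from general knowledge.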

🧩 3.0 Examples of Custodial AI

Custodial AIs may steward:

- Individual memory: e.g., maintaining a lifelong trust graph or moral narrative
- DAO constitutions: e.g., preserving evolving consensus mechanisms and governance rules
- Nation-state charters: e.g., simulating the implications of proposed laws against founding documents
- Organizational vision: e.g., preserving continuity of mission over leadership transitions
- Scientific databases: e.g., ensuring epistemic integrity and provenance of research
- Protocol semantics: e.g., maintaining coherence in meaning across evolving implementations

🔖 4.0 Key Principles

- Human-Centric Alignment: Every custodial AI must be accountable to human will, not just to immediate input but to long-term intentionality.
- Artifact-Centric Intelligence: The AI serves a specific object, value structure, or semantic domain, not a generalized Q&A interface.
- Persistent Contextualization: The AI retains memory and narrative across time, operating across generations, not just sessions.
- Reversibility & Auditability: Every change or action must be explainable, traceable, and contestable by human stakeholders.
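Reversibility and auditability can be made concrete with an append-only, hash-chained change log. This is a minimal sketch, assuming the artifact's state can be represented as a string. The class names `AuditLog` and `Entry` are hypothetical, and SHA-256 chaining is one common tamper-evidence technique, not something the symposium specified. The log works as follows:

- Every change carries an author and a rationale, which makes it explainable.
- Each entry commits to the hash of the one before it, which makes it traceable.
- A revert is recorded as a new entry, never as an erasure, which keeps it contestable.

```python
import hashlib
import json
from dataclasses import dataclass


@dataclass(frozen=True)
class Entry:
    """One recorded change: who made it, why, and the artifact state before and after."""

    author: str
    rationale: str
    before: str
    after: str
    prev_hash: str
    digest: str


class AuditLog:
    """Hypothetical append-only, hash-chained change log for a custodial artifact."""

    GENESIS = "0" * 64  # placeholder hash preceding the first entry

    def __init__(self, initial_state: str) -> None:
        self.initial_state = initial_state
        self.state = initial_state
        self.entries: list[Entry] = []

    @staticmethod
    def _digest(author: str, rationale: str, before: str, after: str, prev_hash: str) -> str:
        # JSON encoding of a fixed-order list keeps the hashed payload unambiguous.
        payload = json.dumps([author, rationale, before, after, prev_hash])
        return hashlib.sha256(payload.encode("utf-8")).hexdigest()

    def apply(self, author: str, rationale: str, new_state: str) -> Entry:
        """Record a change with its rationale; the state never changes without an entry."""
        prev = self.entries[-1].digest if self.entries else self.GENESIS
        digest = self._digest(author, rationale, self.state, new_state, prev)
        entry = Entry(author, rationale, self.state, new_state, prev, digest)
        self.entries.append(entry)
        self.state = new_state
        return entry

    def revert_last(self, author: str, rationale: str) -> Entry:
        """Undo the latest change by appending its inverse, never by deleting history."""
        if not self.entries:
            raise ValueError("nothing to revert")
        return self.apply(author, f"revert: {rationale}", self.entries[-1].before)

    def verify(self) -> bool:
        """Replay the chain; an edited, reordered, or missing entry makes this False."""
        prev, state = self.GENESIS, self.initial_state
        for e in self.entries:
            expected = self._digest(e.author, e.rationale, e.before, e.after, prev)
            if e.prev_hash != prev or e.digest != expected or e.before != state:
                return False
            prev, state = e.digest, e.after
        return state == self.state
```

After `apply(...)` followed by `revert_last(...)`, the artifact is back in its original state, but the log holds two entries explaining both moves. Rewriting either entry's rationale after the fact causes `verify()` to fail. In a real system the log would also need durable storage and signed authorship, which this sketch leaves out.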

🔁 5.0 Why Now?

As institutions fragment and attention spans shrink, the need for semantic continuity grows.

In a world where LLMs shape decisions, we must ask:

Who remembers why we made those decisions?

Custodial AI offers an answer—not through control, but through fidelity to human meaning.

🧬 6.0 Outcomes & Recommendations

- Establish a shared design language: define taxonomy, lifecycle, affordances, and boundaries for custodial AI.
- Launch open-source custodial scaffolds: tools for personal trust agents, DAOs, and public memory layers.
- Host a semantic governance summit: set standards for provenance, reversibility, and collective memory.
- Create public testbeds. Examples could include:
  - “Custodian of the Climate Accord”
  - “Memory of a Neighborhood”
- Explore ‘Slow AI’ ethics: reframe performance around persistence, trust, and alignment, not just speed or scale.

📜 7.0 Closing Reflection

“In designing for the future, we must design for memory. Not just of facts—but of values, judgments, and dreams.”

Custodial AI may be the first step toward an ethics of continuity in artificial intelligence:

Not artificial generality.

Not artificial autonomy.

But artificial remembrance—rooted in responsibility.

The Long Now Symposium on Custodial AI [imagined]

Spring 02025 | Stewardship in the Age of Machine Intent