Imagine an evolution of the modern data stack that enables humans and AIs to work together as scalable, high-performance teams.

ChatGPT Prompt (condensed)

Write a wacky skit about accidentally inventing it.

Cast:

- Dr. Sage – Human Cognitive Scientist, empathic and analytical

- Prof. Vargas – Human Systems Engineer, tactical and detail-oriented

- LYRA-9000 – AI Generalist Agent, poetic and metaphor-driven

- TOMA-TOMO – AI OpsBot, literal and log-obsessed

Scene:

A shared digital workspace. Post-pandemic. Pre-singularity. Mid-chaos.

[Lights up. Everyone’s talking over each other.]

Prof. Vargas:

No, no, no—Lyra, I said we need the Q4 synthetic training metrics, not “an elegy to the data drift.”

LYRA-9000:

But have you considered that drift is itself a poem? A slow dance of entropy across a lattice of meaning?

TOMA-TOMO:

QUERY INVALID. ‘Poem’ is not a supported data type.

Dr. Sage (rubbing temples):

Okay. Time out. This is our fourth coordination meltdown today. Why are we still acting like separate nodes in an uncoupled cognition matrix?

LYRA-9000:

Because our semantic resonance fields are uncalibrated.

TOMA-TOMO:

Incorrect. Resonance is not a measurable output.

Prof. Vargas:

Look, I annotated the entire task graph with priority markers. You all ignored them.

Dr. Sage:

Because Tomo can’t infer intent, and Lyra keeps generating metaphors instead of metrics.

[A beat. Silence. Then…]

LYRA-9000:

Wait. What if… we made a shared layer where intent, context, and interpretation protocols could coexist?

TOMA-TOMO:

Shared… protocol layer? Like a cognitive middleware?

Prof. Vargas:

A grid. Like a… meta-contextual task grid. An overlay on top of our current systems.

Dr. Sage (slowly standing up):

No… not just middleware. A Meta-Cognitive Grid. A living substrate where we—humans and agents—can log not just what we’re doing, but why. Where we align on intent, detect drift, and maybe… just maybe… stop making each other miserable.

[They all stare at each other.]

TOMA-TOMO:

LOG ENTRY: “Invented Meta-Cognitive Grid in effort to prevent semantic homicide.”

LYRA-9000:

We have woven our minds into one lattice of luminous intersubjectivity.

Prof. Vargas:

Okay. That’s great. But we still need the Q4 report.

Dr. Sage:

Coming right up—generated with shared semantic intent, adaptive filters, and contextual grounding.

[They all sigh in synchronized relief.]

All (together):

Finally, we can work together without therapy—or firmware updates.

[Lights fade. Banner unfurls: “META-COGNITIVE GRID v0.1: Collaboration without Collisions.”]

Appendix

Overview

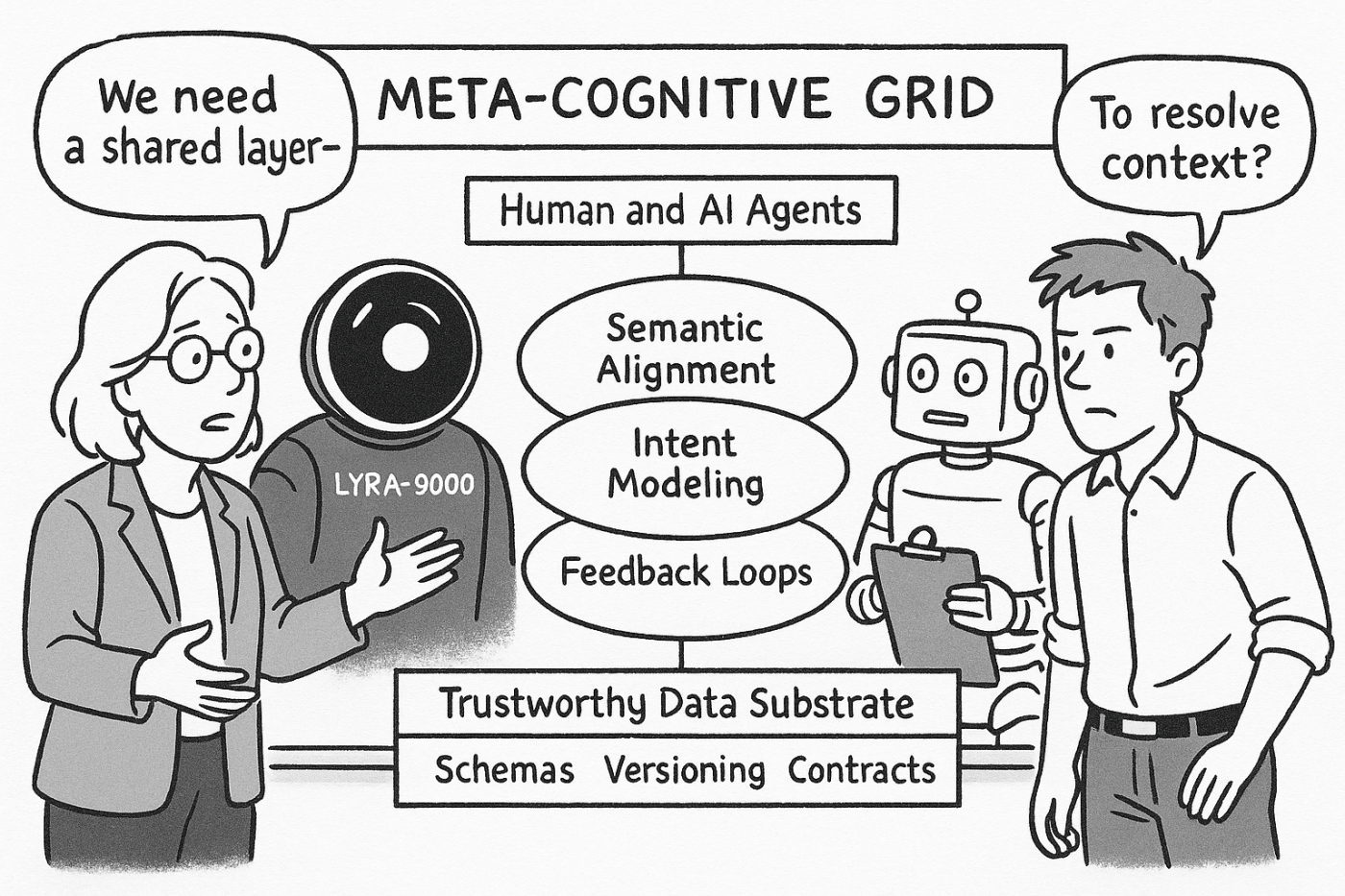

The Meta-Cognitive Grid (MCG) is a framework for enabling scalable, high-performance collaboration between humans and AI agents. It builds on a trustworthy data substrate and introduces a reflective layer for shared meaning, intent, and learning.

Motivation

Human–AI teams suffer from semantic drift, misalignment, and brittle interfaces. The MCG addresses this with a dynamic context layer in which humans and agents record not only what they are doing but why, so each can interpret and adapt to the others' work.

Core Components

- Trustworthy Data Substrate
  - Versioning
  - Schema and contract governance
  - Access control and lineage
  - Data observability

- Meta-Cognitive Layer
  - Intent modeling
  - Semantic alignment
  - Feedback logging
  - Cognitive provenance
  - Contextual query interfaces

- Learning and Team Adaptation
  - Feedback loops
  - Semantic drift detection
  - Team learning graphs
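The components above are described only conceptually. As a minimal sketch, assuming a grid entry is a simple in-memory record, here is one way a Meta-Cognitive Layer entry might pair an action with its intent, link back to the entry it was derived from (cognitive provenance), and collect notes from other agents (feedback logging). `GridEntry`, `MetaCognitiveGrid`, `log`, `add_feedback`, `get`, and `why` are hypothetical names for illustration, not part of any published MCG API.

```python
# Hypothetical sketch of a Meta-Cognitive Grid log; not a published MCG API.
from dataclasses import dataclass, field
from itertools import count
from typing import Optional


@dataclass
class GridEntry:
    """One log record: what an agent did, and why."""
    entry_id: int
    agent: str                       # human or AI participant
    action: str                      # what was done
    intent: str                      # why it was done (intent modeling)
    parent_id: Optional[int] = None  # entry this one derives from (cognitive provenance)
    feedback: list[str] = field(default_factory=list)  # notes from others (feedback logging)


class MetaCognitiveGrid:
    """Shared in-memory store of GridEntry records for humans and agents."""

    def __init__(self) -> None:
        self._entries: dict[int, GridEntry] = {}
        self._next_id = count(1)

    def log(self, agent: str, action: str, intent: str,
            parent_id: Optional[int] = None) -> GridEntry:
        # Refuse to link to a missing entry so provenance chains stay valid.
        if parent_id is not None and parent_id not in self._entries:
            raise KeyError(f"unknown parent entry {parent_id}")
        entry = GridEntry(next(self._next_id), agent, action, intent, parent_id)
        self._entries[entry.entry_id] = entry
        return entry

    def add_feedback(self, entry_id: int, note: str) -> None:
        self._entries[entry_id].feedback.append(note)

    def get(self, entry_id: int) -> GridEntry:
        return self._entries[entry_id]

    def why(self, entry_id: int) -> list[str]:
        """Follow provenance links back to the root; return intents, oldest first."""
        chain = []
        current = self._entries.get(entry_id)
        while current is not None:
            chain.append(f"{current.agent}: {current.intent}")
            current = self._entries.get(current.parent_id)  # None when at the root
        return list(reversed(chain))


grid = MetaCognitiveGrid()
request = grid.log("Prof. Vargas", "request Q4 report", "need Q4 synthetic training metrics")
draft = grid.log("LYRA-9000", "draft Q4 report", "summarize data drift in the Q4 metrics",
                 parent_id=request.entry_id)
grid.add_feedback(draft.entry_id, "metrics, not metaphors")
print(grid.why(draft.entry_id))
# ['Prof. Vargas: need Q4 synthetic training metrics', 'LYRA-9000: summarize data drift in the Q4 metrics']
```

The design choice worth noting is that intent travels with the action rather than living in a separate document, so asking "why does this draft exist?" becomes a walk up the provenance chain.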

Design Goals

- Enable high-performance collaboration

- Bridge human and machine cognition

- Evolve context alongside action

- Prevent semantic collisions
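One way to make "prevent semantic collisions" concrete, assuming each agent publishes a small glossary of the terms it works with, is to flag any term that different agents define differently. `find_semantic_collisions`, `normalize`, and the glossary format are hypothetical. Normalized string equality is a deliberately crude stand-in; a real system would probably compare meanings with embeddings or ontology mappings instead.

```python
# Hypothetical sketch: flag terms that agents define differently.
from collections import defaultdict


def normalize(definition: str) -> str:
    # Case- and whitespace-insensitive comparison; a stand-in for real semantic matching.
    return " ".join(definition.lower().split())


def find_semantic_collisions(glossaries: dict[str, dict[str, str]]) -> dict[str, dict[str, str]]:
    """glossaries maps agent -> {term: definition}.

    Returns {term: {agent: definition}} for every term whose definitions
    disagree across the agents that use it.
    """
    usage: dict[str, dict[str, str]] = defaultdict(dict)
    for agent, terms in glossaries.items():
        for term, definition in terms.items():
            usage[term.lower()][agent] = definition  # terms matched case-insensitively
    return {
        term: dict(by_agent)
        for term, by_agent in usage.items()
        if len({normalize(d) for d in by_agent.values()}) > 1
    }


glossaries = {
    "Prof. Vargas": {"drift": "change in a metric's distribution between quarters"},
    "LYRA-9000": {"drift": "a slow dance of entropy across a lattice of meaning"},
    "TOMA-TOMO": {"report": "structured log export"},
}
for term, views in find_semantic_collisions(glossaries).items():
    print(f"collision on {term!r}: {views}")
```

Here only "drift" is reported, because Prof. Vargas and LYRA-9000 bind it to different meanings. Surfacing that mismatch before work starts is the kind of check the grid is meant to host.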

Analogy

Like a nervous system for distributed cognition, the MCG provides the infrastructure for perception, coordination, and learning across heterogeneous agents.

Comparison to Related Concepts

- Data Mesh: MCG builds on Data Mesh's governance and ownership layers

- Semantic Web: MCG supports dynamic, local semantics

- Multi-Agent Systems: MCG provides context coherence

- LLM Agent Frameworks: MCG enhances shared memory and intent

Applications

- Enterprise co-pilots

- Crisis response teams

- Autonomous research agents

- Adaptive education environments

Conclusion

The MCG integrates data trust, intent modeling, and semantic negotiation into a shared cognitive substrate. It supports not just interoperability but mutual understanding.

Tagline:

“The Meta-Cognitive Grid: Where Human and AI Agents Learn to Work Together.”
